Samsung exec touts Galaxy S24 as true phone camera revolution

Samsung’s latest flagship phone comes with 112 AI models for enhanced camera functions

By Jo He-rim

Published: Jan. 23, 2024 - 15:23

SAN JOSE, California -- The Galaxy S24 series is the "ultimate collection" of Samsung Electronics' artificial intelligence technology for cameras, allowing users to become "expert photographers" with just a few intuitive taps on the screen, according to the tech giant's visual solutions chief.

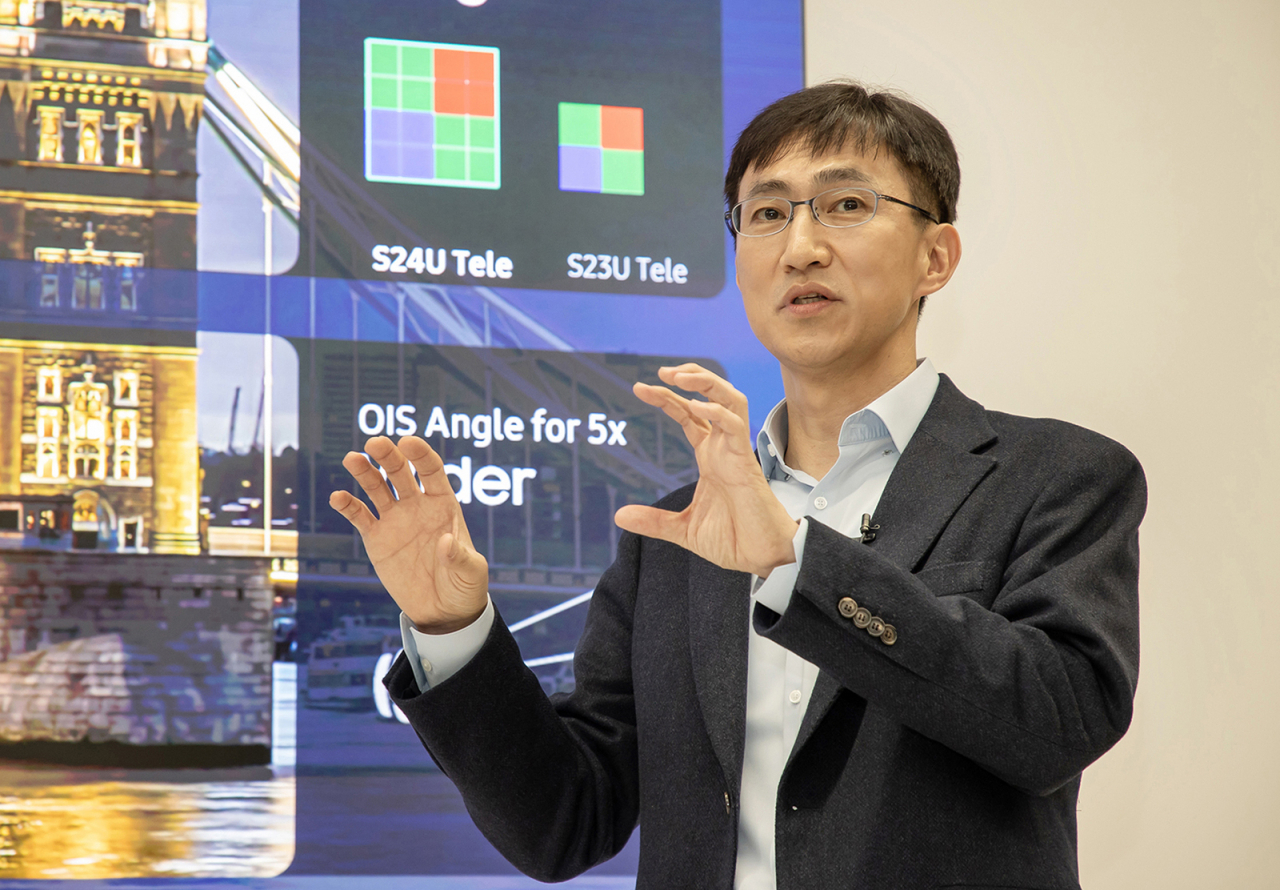

Samsung Electronics Executive Vice President Cho Sung-dae, who is in charge of the visual solutions team, explained how the latest Galaxy S24 series employs 112 visual AI models and a highly advanced neural processing unit for new camera features, at a press conference Thursday.

"The Galaxy S24 series starts and ends with AI. Dubbed the ProVisual Engine, it is the ultimate collection of Samsung's AI features for camera," Cho said, speaking at the press meeting held at Samsung Research America after the Galaxy Unpacked 2024 event that wrapped up the day before.

"The ProVisual Engine consists of largely three parts, the best AI model application, AI training with 'good' data sources and using the best energy processing unit and NPU."

Samsung launched its first AI-powered flagship smartphone, which includes enhanced camera features ranging from high resolution and advanced zoom to smarter image editing.

For the latest model, Cho said that the smartphone maker has mainly focused on four aspects for a better visual experience for users: capture, view, edit and share.

Samsung first employed a neural processing unit to introduce an AI model in its mobile devices with the Galaxy S10 series in 2019. At that time, the company used four AI models. For the latest Galaxy S24, the number has increased to 112, allowing for clearer images, according to Cho.

"We now use 28 times more AI models. They work in the background from the moment the user presses the shutter button."

While the Galaxy S10 operated with an NPU that ran at 4 trillion to 5 trillion operations per second, the latest series employs an NPU 13 times faster, according to the executive vice president.
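The figures Cho cites can be checked with simple arithmetic. The following is a minimal sketch in Python that uses only the numbers quoted in this article; the variable names are illustrative, and the resulting NPU range is an inference from the "13 times faster" figure, not a number stated by Samsung.

```python
# Figures quoted in the article
S10_AI_MODELS = 4        # AI models used in the Galaxy S10 (2019)
S24_AI_MODELS = 112      # AI models used in the Galaxy S24
S10_NPU_TOPS = (4, 5)    # S10 NPU range, in trillions of operations per second
NPU_SPEEDUP = 13         # "13 times faster," per Cho

# Ratio of AI models between the two generations
model_ratio = S24_AI_MODELS / S10_AI_MODELS

# Implied S24 NPU range (an inference, not a figure given by Samsung)
implied_s24_tops = tuple(t * NPU_SPEEDUP for t in S10_NPU_TOPS)

print(f"AI model increase: {model_ratio:.0f}x")
print(f"Implied S24 NPU range: {implied_s24_tops[0]}-{implied_s24_tops[1]} trillion ops/sec")
```

The model ratio matches the "28 times more" figure in Cho's quote, and the speedup implies roughly 52 trillion to 65 trillion operations per second for the new NPU.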

The faster NPU makes it possible to run more complex AI models. Samsung also introduced the Quad Tele System.

As to why the company adopted a new 5x optical zoom lens in place of the 10x lens, Cho explained that it is not a downgrade but an upgrade, as it also uses the bigger 50-megapixel sensor that is used for a 1x camera.

The new optical zoom lens with a better sensor enables optical-quality performance at zoom levels from 2x, 3x and 5x up to 100x magnification, supported by the Adaptive Pixel Sensor, Cho added.

Cho also dismissed concerns that the AI camera would consume more storage space and battery power, citing its advanced technologies.

"We made a lot of efforts so that the size of files does not increase. For instance, by selecting 'motion photo,' we can reduce the size of the file by 40 percent when compared to the images made by the previous Galaxy smartphones."

For "Nightography," the company has continued to make improvements over the past three years. With a wider optical image stabilizer, blur in the Galaxy S24 series has been reduced and compensation for shaky hands has been enhanced.

For the Edit Suggestion feature, if the AI fails to recommend a revision, the user can manually make changes via the Enhance-X app.

With the better NPU and frame rate conversion technology, the tech giant was also able to enhance the Instant Slow-mo zoom feature, with AI generating images for smooth transition of the frames, Cho explained.

For the feature, the researchers sought to enable zooming not only in space, but also in time. According to Cho, the project to put slow-motion features at users' fingertips was named Spatial Temporal Zoom and Time Zoom.

The visual solutions chief said he looks forward to introducing entirely new features in the future, based on new technologies that have yet to be released.

"We plan our product development road map, but we always make changes on the way because new technology keeps coming up. With the generative AI introduced, there are new scenarios (for product development) that we have not imagined before," Cho said.

“We are currently working on ways to utilize the generative images and videos, so I believe I’ll be able to present some new solutions that we have never imagined before.”