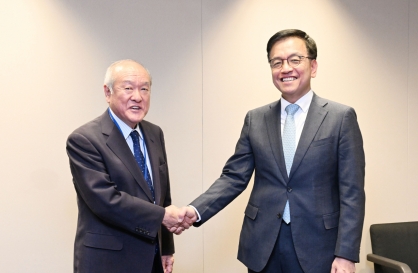

[Herald Interview] Glimpse into future of AI that talks fluently

Atlas Labs vows to challenge tech giants with AI-powered voice call application

By Shim Woo-hyun

Published: Oct. 25, 2020 - 16:01

While artificial intelligence is slowly entering everyday life, AI that speaks and understands human language flawlessly is still a work in progress for tech firms.

Google’s Duplex technology, for instance, has since 2018 allowed users to make restaurant reservations or arrange appointments automatically, with the AI assistant making the phone call.

South Korean telecommunications firm SKT added its self-developed voice assistant Nugu to its voice call application earlier this month, and outlined plans to add new voice command-powered features.

The existing solutions are still task-oriented as natural language processing models are not fully developed.

“However, the AI engines will eventually become able to handle more complex sentences and dialogues in the future,” said Atlas Labs CEO Robin Lyu in an interview with The Korea Herald.

Atlas Labs is a Korean startup that has developed a voice call application that utilizes an AI language model and speech-to-text technology.

The mobile-based application can automatically transcribe users’ conversations and have the text available to the users right after their calls end.

“There will be a lot more benefits that people can enjoy when AI engines can better understand the language that people use,” Lyu said.

For instance, even people without technical backgrounds will be able to ask their AI assistant to program their own webpages or platforms.

The overall market for natural language processing models, of course, is being led by giant tech companies.

Leading the global competition are Nvidia, Google and Microsoft.

These firms have the upper hand in developing state-of-the-art language processing AI models, as their cutting-edge supercomputers give them substantial computing power.

As neural network architectures of conversational AI models become larger, they require more computing power to process.

In Korea, Samsung Electronics used Google’s cloud-based Tensor Processing Unit -- a custom application-specific integrated circuit built specifically for machine learning -- to enhance its AI assistant Bixby. Naver has purchased Nvidia’s DGX A100 systems to build its own supercomputer for machine learning.

Although it may not have such computing power, Atlas Labs plans to make AI technology more relatable to the general public, which in turn will provide datasets that can help make its language processing model more accurate.

“Atlas Labs has focused on the tremendous amount of language data that gets lost when calls are over, and decided to develop a solution that can collect them,” said Lyu.

Its AI-powered voice call application, which can record and collect that data, will allow it to be used as a resource to further train the AI, he explained.

Atlas Labs is currently operating a closed-beta service. The company said it would officially launch the service early next year. The company added it would also roll out the service in Japan down the road.

Lyu said Atlas Labs’ solution is one of the applications that can fit into an ecosystem of cloud-based applications from which many users can benefit. Lyu added that the company will work toward developing or supporting a cloud-based software ecosystem that functions through the everyday language people use.

By Shim Woo-hyun (ws@heraldcorp.com)
